As technology becomes smarter, it must be humanized – designed to be more relatable, not more human.

“I’m sorry, Dave. I’m afraid I can’t do that.”

That seemingly innocuous statement, uttered by the HAL 9000 in Stanley Kubrick’s 2001: A Space Odyssey, became a chilling symbol of artificial intelligence run amok. Long before today’s AI, science fiction writers like Arthur C. Clarke were painting a future where computers and robots would turn against us as soon as they achieved sentience.

Where others see dangers, we see opportunities to design a new story about how humans interact with intelligent agents.

With the continued growth of voice-driven AI agents across all the major platforms (Apple’s Siri, Amazon’s Alexa, Microsoft’s Cortana, and others), artificial intelligence (AI) is becoming more mainstream. Consumers now have a name and even a voice to associate with these capabilities. Still, current experiences have yet to deliver on AI’s full potential. While these agents are created to sound friendly, they have not yet attained the subtle touches and nuances that are essential to feeling human.

Though these gaps expose the current shortcomings of the technology, they also suggest a need for strategic principles to guide the design of these experiences. Through our work creating user experiences for self-driving vehicles, smart appliances and AI agents, we have gleaned insights into the most effective ways to humanize artificially intelligent experiences.

01

Imitation feels fake, relatability feels real

Prioritize relatability to humans over literal imitation of human appearance and conversation.

One of the first mistakes we make as we try to humanize technology is to pursue literal expressions of human features: a human body or face, a human-sounding voice and a human-like personality. Mimicking human characteristics often has the unintended effect of alienating us. Relatability, rather than imitation or replication, turns out to be a better yardstick for making technology feel human.

A smartphone has an endearing quality, in part because of how much we depend on it and the intimate place it holds in our daily lives. Yet it does not look or act like a human being. Engagement is developed through relationships.

Being relatable is all about the dynamics and qualities of relationships. Relationships are dynamic and multidimensional, and they develop over time as trust and insight increase. The most valuable relationships are the ones in which people feel the most comfortable, the most understood and the most empowered. Intelligent voice assistants or agents should be created with these relationship contexts in mind. It is important to establish a symbiotic relationship between a digital agent and its user, a relationship that shows respect for and understanding of each other’s needs and context. People should see technology as a collaborator, a partner and a companion, not as a rival or servant.

02

To make it more human, look beyond the human

Find ways to respond and emote that are not one-to-one imitations of human behavior.

A cat’s purr has no real analog in human behavior, yet everyone believes a cat is expressing connection and affection when it purrs. We are capable of reading all kinds of emotions into our pets’ sounds and gestures because we anthropomorphize their behavior. Similarly, R2-D2 from Star Wars has garnered a lot of empathy and love from fans without ever smiling, crying, or laughing.

The lesson for designers is that we should broaden our search as we build our lexicon of gestures, behaviors, and sounds. Some of the most endearing characters are non-human in form yet recognizably human. Humans have developed an unspoken set of rules around what are appropriate ways to express emotions to each other in different settings. As long as our creations don’t violate those rules, they may incorporate traits borrowed from animals or made up out of whole cloth and we will embrace them warmly.

03

Let the user be human

Allow your user to fluidly interact with the system using conversational language and familiar references.

When designing an AI, you are also designing the way a user interacts with the AI. Keeping human-centered design principles in mind, the ideal experience is to allow users to act naturally, using the language and intonation they would normally use. Don’t make users speak like a computer.

Many interactions with AI will be task-oriented, but how users make requests will vary. This is an opportunity for the AI to connect the dots: to be aware of what the user will need and surface that information, rather than forcing the user to operate like a computer and lead the AI to each dot. For example, if a user is initiating a task related to an appointment, do not make the user ask to see their calendar. The AI can take the initiative and surface the calendar in anticipation of the user’s needs.

04

Respond flexibly

Avoid repetition by allowing the system to adapt its response to user requests.

A three-year-old child will repeatedly ask the same question when they do not understand a response, so it’s understandable that early architectures of artificial intelligence also exhibit this behavior. But one of the quickest ways to expose an agent’s algorithmic underpinnings is for it to repeat itself or respond with an error message. As people mature, they quickly learn that varying the framing of their questions is more effective at eliciting an understandable response, as well as less frustrating for everyone involved. The key component of a relatable agent is the adaptive, varied way it achieves understanding. When designing an agent with a relatable user experience, seek to construct adaptive responses that evolve.
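One way to picture this principle is an agent that changes how it asks for clarification each time it fails to understand, instead of repeating a single error message. The following is only a minimal sketch under our own assumptions: the class name `ClarifyingAgent`, the three strategy phrasings and the `options` list are hypothetical and are not taken from any real assistant.

```python
from dataclasses import dataclass

# Hypothetical clarification strategies, ordered from lightest touch
# to most explicit. A real agent would need far more variety.
STRATEGIES = [
    "Sorry, I didn't catch that. Could you say it another way?",
    "I think you're asking about {topic}. Is that right?",
    "I'm still not getting it. Here are some things I can help with: {options}.",
]


@dataclass
class ClarifyingAgent:
    options: list[str]   # things the agent can offer as a fallback
    misses: int = 0      # consecutive misunderstandings so far

    def on_understood(self) -> None:
        """Reset the counter once a request has been understood."""
        self.misses = 0

    def on_misunderstood(self, topic_guess: str = "something new") -> str:
        """Return a clarification prompt that differs from the previous one."""
        # Escalate one step per miss; stay on the most explicit
        # strategy once the list is exhausted.
        index = min(self.misses, len(STRATEGIES) - 1)
        self.misses += 1
        return STRATEGIES[index].format(
            topic=topic_guess, options=", ".join(self.options)
        )


agent = ClarifyingAgent(options=["timers", "weather"])
print(agent.on_misunderstood("timers"))  # light rephrase request
print(agent.on_misunderstood("timers"))  # confirms a guess
print(agent.on_misunderstood("timers"))  # offers concrete options
```

The point of the sketch is the escalation rather than the wording: each miss changes the agent's approach, much as a person reframes a question that did not land the first time.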

05

To err is human — great AI is no different

Allow the system to express enough self-awareness to indicate it understands when it is getting things wrong, and that it wants to improve.

Imagine training a puppy: you are willing to repeat a command many times while the puppy learns before you give up trying to teach it a new trick. Even though the puppy doesn’t get it right the first few times, something about your perception of the puppy at this stage makes you more tolerant of its mistakes. You may even consider how complicated the trick is relative to the puppy’s other abilities when gauging your patience.

The user-agent relationship can tap into this phenomenon if the right expectations are communicated and the agent can express the humility of sincerely trying to learn. Users will then be more tolerant of mistakes and more willing to participate in steps that allow the agent to improve.

06

Consistency is the key to trust

Users are more willing to continue engaging with an agent or system when they know what to expect.

Humans are famously inconsistent in their behavior, but your AI agent can’t be. Unexpected or volatile responses and behaviors degrade the user’s trust that the agent will behave appropriately in a given setting. They also create frustration and roadblocks when the user tries to accomplish tasks, leaving the user with less reason to invest in using the agent.

Using a metaphor when designing the behaviors and personality of an agent is an exceptional way to bake in what the user can expect, based on prior exposure to the subject of the metaphor. This does not require a 1:1 mapping of the metaphor, only a higher-level alignment of expectations. Personality is another tool for establishing expectations. People’s prior experience with a personality sets their expectations at a more granular level than the mapping of the metaphor does.

07

Anticipate, but never assume

The agent should be able to supply help — without overreaching in its assumptions.

There is a fine line between helpful and meddlesome. If you want users to welcome agents into their lives, you need to design your AI to anticipate needs without assuming too much. Walking this line is never easy. Hello Barbie™ is an excellent example of an agent that pushes assumptions a bit too far, by always redirecting the conversation to fashion. This assumes too much — exposing the agenda of the maker — rather than teasing out the user’s desires and appropriately personalizing their experience.

08

Leverage context in communication

Ensure that agents engage more naturally by leveraging contextual cues.

People learn to use contextual cues to inform their responses and reactions as they grow and develop social skills. For users to find an agent relatable, the AI will need to develop similarly through experience. By combining contextual data from device sensors with past behavioral insights, AI agents can learn patterns that continually improve the experience.

A thought experiment can help clarify how we, as humans, communicate. Imagine that you are sitting in your living room with a friend, and you raise the subject of going out for dinner. Your friend uses contextual cues to work out a response. Your friend knows the places you both like, what time it is, whether you need something quick, and what your past experiences have been. Your friend also picks up on more subtle cues: the expression on your face, whether your breathing is abnormally fast, whether you sound exceptionally celebratory, and so on. Combining all of these cues allows your friend to respond in a way that is relevant and engaging and that inspires continued discussion.

09

Great personas are multidimensional

Agents should be created with flexible modes to align to different relationships, roles and users.

How we speak to a child is different from how we talk to our boss. Our exuberance at a happy hour stands in stark contrast to our demeanor at a funeral. This ability to vary our persona gives us complexity and makes us interesting. AI agents need to be multidimensional, too. If the agent engages with a child, for example, the system should adjust its tone and its level of autonomy compared with how it interacts with a parent.

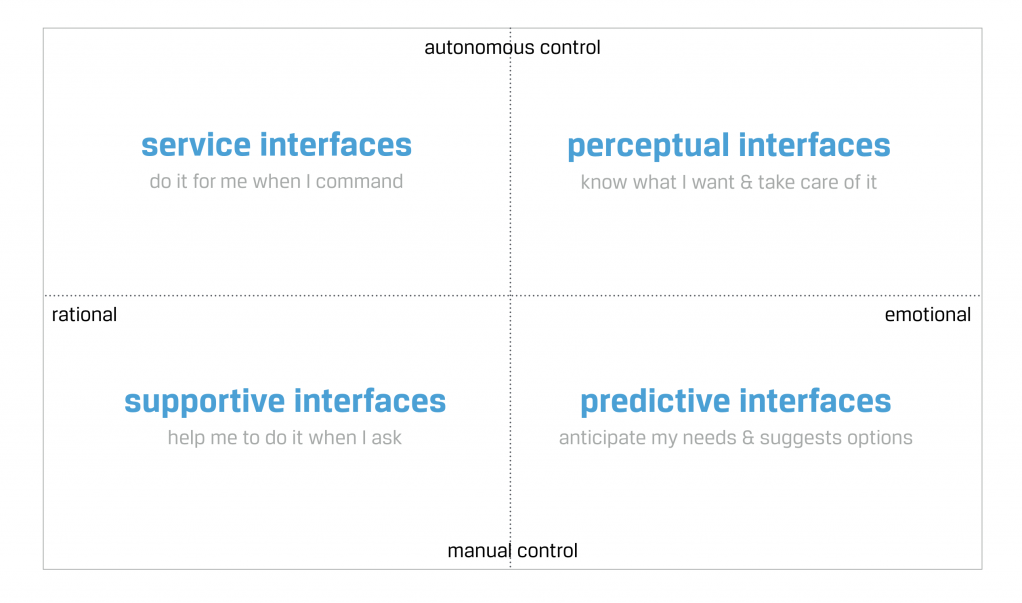

For the best agent experience, determine the different relationship roles through which the agent will engage with users. In our work, we have created a simple framework that maps relationship roles according to degree of control and warmth of relationship. The accompanying figure shows an example of a model that we have used in creating a smart-appliance agent experience.
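To make the idea of role-based modes concrete, here is a small sketch of how roles placed on two axes, control and warmth, might drive an agent's behavior. Everything in it is an assumption made for illustration: the role names, the numeric positions, the 0.5 threshold, and the reading of "control" as how much the agent may do on its own are hypothetical and are not taken from our framework or its figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PersonaMode:
    role: str
    control: float  # assumed scale: 0.0 = agent always defers, 1.0 = agent acts on its own
    warmth: float   # assumed scale: 0.0 = formal, 1.0 = affectionate


# Hypothetical roles and positions, chosen only to illustrate the mapping.
MODES = {
    "child": PersonaMode("playful guide", control=0.2, warmth=0.9),
    "parent": PersonaMode("capable assistant", control=0.7, warmth=0.5),
}
DEFAULT_MODE = PersonaMode("polite helper", control=0.3, warmth=0.5)


def select_mode(user_type: str) -> PersonaMode:
    """Pick the persona mode for a user type, falling back to a cautious default."""
    return MODES.get(user_type, DEFAULT_MODE)


def may_act_without_confirmation(mode: PersonaMode, threshold: float = 0.5) -> bool:
    """Let the agent act unprompted only in modes with enough control."""
    return mode.control >= threshold


for user in ("child", "parent", "guest"):
    mode = select_mode(user)
    print(user, mode.role, may_act_without_confirmation(mode))
```

In this sketch the same agent stays recognizable across users while its tone and autonomy shift with the relationship, which is the behavior the child and parent example describes.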

Conclusion

If you add up all of these insights, a portrait of human AI design emerges that is as complex, contradictory and counterintuitive as human nature itself:

- To gain your trust, AI agents must be consistent — but AI agents must also be flexible enough to vary their personas depending on context.

- Agents are most helpful when they anticipate users’ needs — but assume too much and they become annoying.

- The more AI mimics human forms or personas, the more it alienates.

There’s a certain je ne sais quoi that pervades our sense of what makes us human. We have a hard time defining it, but we definitely know it when we see it. If there’s a single theme that runs through all of our findings, it’s that AI works best when we find it relatable: when it feels natural, unforced and familiar. It sounds simple, but achieving that relatable quality involves a complex alchemy of design, intention and serendipity. Although AI is still in its infancy, it’s evolving fast. Building in adaptability and flexibility means your AI will feel more human now and evolve as it interacts with us.

A Punchcut Perspective

Chelsea Cropper, Interaction Designer

Ken Olewiler, Principal Managing Director

Contributors: Dave Schrimp, Nate Cox, Jared Benson